Winutils Exe Hadoop For Windows

The official release of Apache Hadoop 2.6.0 does not include the binaries (e.g., winutils.exe) required to run Hadoop on Windows.

Introduction

This post is to help people install and run Apache Spark on a computer with Windows 10 (it may also help for prior versions of Windows or even Linux and Mac OS systems) who want to try out the engine and learn how to interact with it without spending too many resources. If you really want to build a serious prototype, I strongly recommend installing one of the virtual machines I mentioned in a post a couple of years ago, or spending some money on a Hadoop distribution in the cloud. The new versions of these VMs come with Spark ready to use.

A few words about Apache Spark

Apache Spark is making a lot of noise in the IT world as a general engine for large-scale data processing, able to run programs up to 100x faster than Hadoop MapReduce thanks to its in-memory computing capabilities. It is possible to write Spark applications using Java, Python, Scala and R, and it comes with built-in libraries to work with structured data, graph computation, machine learning and streaming.

Spark runs on Hadoop, on Mesos, in the cloud or standalone. The last is the case in this post: we are going to install Spark 1.6.0 as standalone on a computer with a 32-bit Windows 10 installation (my very old laptop). Let’s get started.

Install or update Java

For any application that uses the Java Virtual Machine, it is always recommended to install the appropriate Java version. In this case I just updated my Java version as follows: Start – All apps – Java – Check For Updates.

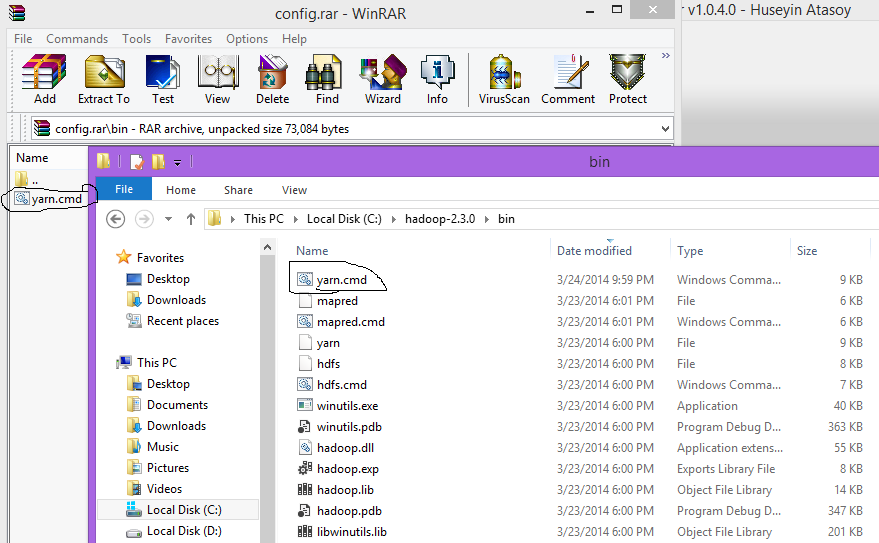

Spark Download

Feel free also to download the source code and make your own build if you feel comfortable with it. Extract the files to any location on your drive where your user has sufficient permissions.

Download winutils.exe

This was the critical point for me, because I downloaded one version and it did not work until I realized that there are 64-bit and 32-bit versions of this file. To make my trip even longer, I had to install Git to be able to download the 32-bit winutils.exe. If you know another link where this file can be found, please share it with us. (I hope you don’t get stuck in this step.) Extract the folder containing the file winutils.exe to any location of your preference.

Environment Variables Configuration

This is also crucial in order to run some commands without problems from the command prompt.

_JAVA_OPTIONS: I set this variable to the value shown in the figure below. I was getting Java heap memory problems with the default values and this fixed the problem.

HADOOP_HOME: even though Spark can run without Hadoop, the version I downloaded is prebuilt for Hadoop 2.6 and the code looks for it. To fix this inconvenience I set this variable to the folder containing the winutils.exe file.

JAVA_HOME: usually you already set this variable when you install Java, but it is better to verify that it exists and is correct.

SCALA_HOME: the bin folder of the Scala location. If you use the standard location from the installer, it should be the path in the figure below.

SPARK_HOME: the bin folder path of where you uncompressed Spark.

Figure: Environment Variables 2/2

Permissions for the folder tmp/hive

I struggled a little bit with this issue. After I set everything up, I tried to run the spark-shell from the command line and got an error that was hard to debug. The shell tries to find the folder tmp/hive and was not able to set the SQL Context.

I looked at my C drive and found that the C:\tmp\hive folder had been created. If it has not, you can create it yourself and set 777 permissions on it. In theory you can do this with the advanced sharing options in the Sharing tab of the folder’s properties, but I did it from the command line: open a command prompt as administrator and set the permissions with winutils.

Hi Paul, the winutils issue was my headache.

Please try the following:
– Copy the content of the whole library and try again.
– If this doesn’t help, try to build the Hadoop sources yourself; I wrote a post about it. It was also a pain.
– If you don’t want to walk this way, just let me know and I will share a link to download the winutils I built. I did it with Windows Server 64-bit, but it should also work for Windows 10.
– The last thing I can offer is to download the Hadoop binaries that this blogger offers in this post; the download link is at the very end of the post.

Kind Regards, Paul.

Hi Joyishu, please open a command shell and navigate to the Spark directory (e.g. cd spark16).

This is the directory where the README.md is located and also the bin folder. Start the Scala shell without leaving this directory. You can do that by typing bin\spark-shell. Once the shell has started you are still in this working directory and just need to type val textFile = sc.textFile("README.md"). You don’t need to specify the complete location because the file is located in your working directory. Please note that in your example above there is a typing error in the line with the complete path: there are two opening quotation marks (""C:/Users). If you are using Windows you should also consider the backslashes ("\") in path definitions. Last but not least, there is more information about the textFile function in the Spark documentation. Hope this helps.
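The point about quotation marks and backslashes can be illustrated with a small sketch. It is written in Python rather than Scala, and the helper name spark_friendly_path and the example location are made up for illustration. It only converts a Windows path to forward slashes, the form used in the C:/Users example above, so that the backslashes cannot be mistaken for escape characters inside a string literal.

```python
from pathlib import PureWindowsPath


def spark_friendly_path(windows_path: str) -> str:
    """Return the given Windows path written with forward slashes.

    PureWindowsPath only manipulates the text of the path, so this
    works on any operating system and never touches the disk.
    """
    return PureWindowsPath(windows_path).as_posix()


if __name__ == "__main__":
    # Hypothetical location of the Spark README; adjust to your own folder.
    example = r"C:\spark16\README.md"
    print(spark_friendly_path(example))
```

The resulting string could then be pasted between a single pair of quotation marks in the textFile call. Whether your Spark version accepts it is something to verify on your own installation.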

Great tutorial. Three additions:

First, you don’t need Git installed to download a repository (you might not even need an account on GitHub, but I’m not sure about the latter). In any case, you can download the repository as a ZIP from the root of the repository: select “Clone or download”, then “Download ZIP”.

Secondly, Spark should be installed in a folder path containing no spaces, so don’t install it in “Program Files”.

Thirdly, winutils should explicitly be inside a bin folder inside the Hadoop home folder. In my case HADOOP_HOME points to C:\tools\WinUtils and all the binaries are inside C:\tools\WinUtils\bin. (Maybe this is also the problem @joyishu is suffering from, because I got the exact same error before fixing this.)

Hi Vishal, white spaces cause errors when the application tries to build paths from system or internal variables. I cannot tell you what the impact is for Spark, but the typical example is the content of the JAVA_HOME environment variable. At least for this case you can use the following notation to overcome the problem: Progra~1 = ‘Program Files’, Progra~2 = ‘Program Files(x86)’. For other cases I cannot tell you.
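The layout rules raised in this thread (variables set, no spaces in the Spark path, winutils.exe inside a bin folder under the Hadoop home) can be checked with a short script. The following is only a sketch in Python, not part of the original post. The function name check_spark_setup is made up, and it assumes the bin layout described in the comment above rather than pointing HADOOP_HOME directly at the folder holding winutils.exe.

```python
import os
from pathlib import Path
from typing import List, Mapping


def check_spark_setup(env: Mapping[str, str]) -> List[str]:
    """Return a list of human-readable problems found in the environment."""
    problems = []

    # The variables the post configures that Spark and winutils depend on.
    for name in ("JAVA_HOME", "HADOOP_HOME", "SPARK_HOME"):
        if not env.get(name):
            problems.append(f"{name} is not set")

    # Spaces in the Spark path (e.g. "Program Files") are reported as a risk.
    if " " in env.get("SPARK_HOME", ""):
        problems.append("SPARK_HOME contains spaces")

    # Assumed layout: %HADOOP_HOME%\bin\winutils.exe
    hadoop_home = env.get("HADOOP_HOME", "")
    if hadoop_home:
        winutils = Path(hadoop_home) / "bin" / "winutils.exe"
        if not winutils.is_file():
            problems.append(f"winutils.exe not found at {winutils}")

    return problems


if __name__ == "__main__":
    for problem in check_spark_setup(os.environ):
        print("WARNING:", problem)
```

Running it from the same command prompt you use for spark-shell shows what that prompt actually sees, which is useful because variables set after the prompt was opened are not picked up until you open a new one.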

Try to discuss it with your system administrator, or you may use another drive.

Best regards, Paul.

The blog has helped me a lot with the installation and the errors that occurred, but I am still facing a problem when launching spark-shell on Windows. Can anybody please help with a solution as soon as possible? Thanks in advance.

Hi Vishal, thanks a ton for the reply!

The solution eliminated all the duplication warnings but added a new error: Hive: Failed to access metastore. This class should not accessed in runtime.

org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

However, it was resolved by removing libthrift-0.9.3 from the jars. – helped in pointing out the multiple Thrift loading. But the issue is still occurring:

C:\spark-2.2\bin>spark-shell
Picked up _JAVA_OPTIONS: -Djava.net.preferIPv4Stack=true
Picked up _JAVA_OPTIONS: -Djava.net.preferIPv4Stack=true
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Hi Sneha, I think you have built your session using SBT and added the Hive dependencies to it. That means you have not installed the full-fledged version of Hive.

It will only download a few dependent jars and get the job done. So when you run the session it creates a temporary folder named “metastore_db” or “metastore” in your working directory, i.e. the workspace where you created your project.

But since it reports that it cannot access the “metastore” folder, you do not have access rights (read and write) to that folder. So either change the rights on that folder through the command prompt (using the chmod command) or switch to a workspace where you have full rights.

Hope it helps!!
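Both the post and this reply come down to changing folder permissions from the command prompt. As a rough sketch, not the exact command from the original post, the winutils call could be assembled like this in Python. The function name winutils_chmod_command is made up, the Hadoop home C:\tools\WinUtils is just the example location from an earlier comment, and the bin layout under it is an assumption.

```python
from pathlib import PureWindowsPath
from typing import List


def winutils_chmod_command(hadoop_home: str, target: str, mode: str = "777") -> List[str]:
    """Build the argument list for a winutils chmod call.

    Assumes winutils.exe lives in the bin folder under hadoop_home.
    Nothing is executed here; the list is only constructed.
    """
    winutils = PureWindowsPath(hadoop_home) / "bin" / "winutils.exe"
    return [str(winutils), "chmod", mode, target]


if __name__ == "__main__":
    # Example values only: Hadoop home taken from a comment above,
    # target folder taken from the post.
    command = winutils_chmod_command(r"C:\tools\WinUtils", r"C:\tmp\hive")
    print(" ".join(command))
    # On Windows, from a prompt opened as administrator, the list could be
    # passed to subprocess.run(command, check=True) to actually apply it.
```

The same helper can be pointed at a metastore folder in your workspace instead of C:\tmp\hive by changing the target argument.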
